Key Takeaways:

I. Gemini 2.0's multimodal architecture and strong benchmark performance position it as a serious competitor in the LLM arena.

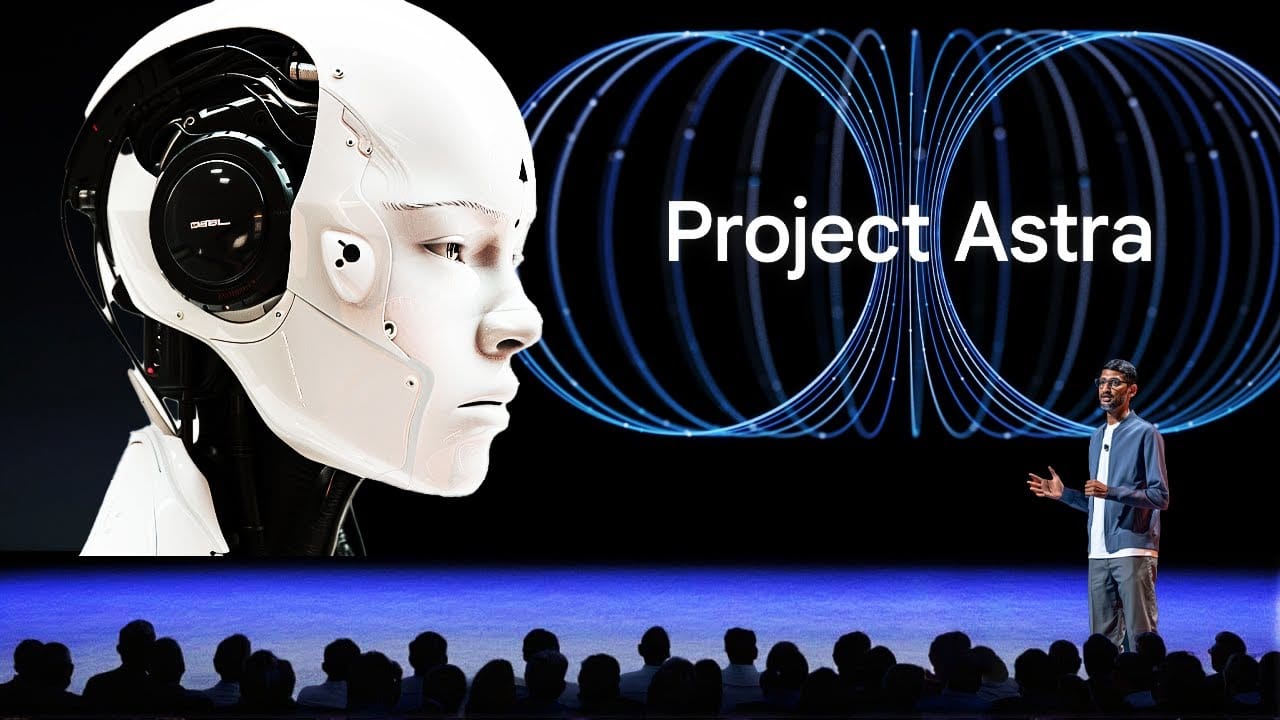

II. Project Astra's integration with Google's ecosystem offers a compelling vision for universal AI assistance, but its success hinges on addressing technical and ethical challenges.

III. The development and deployment of advanced AI systems like Gemini 2.0 and Astra necessitate a responsible approach, considering potential societal impacts and prioritizing ethical guidelines.

Google DeepMind has unveiled a suite of AI advancements, headlined by Gemini 2.0, a multimodal large language model (LLM) that Google describes as significantly faster and more capable than its predecessors, and Project Astra, an experimental universal AI assistant. Gemini 2.0 powers Astra's ability to interact with users through text, speech, image, and video, and Astra is designed to integrate with existing Google services such as Search, Maps, and Lens. Early demonstrations of Astra showcase its potential but also reveal limitations, highlighting how difficult it is to build a truly versatile AI assistant. This article offers a pragmatic assessment of these innovations, exploring their technical underpinnings, strategic implications, and potential impact on the future of AI.

Under the Hood: A Technical Analysis of Gemini 2.0

Gemini 2.0's core innovation lies in its natively multimodal design: rather than attaching separate vision or audio models to a text model, a single model is trained to process and integrate text, code, images, audio, and video. Earlier Gemini models were built on Transformer decoder networks trained on massive multimodal datasets, and Google has not published a full architectural description of Gemini 2.0. Claims about specialized sub-modules for tasks such as factual language understanding, commonsense reasoning, or humor generation should therefore be treated as unconfirmed. Because GPT-4 is also generally understood to be a decoder-style Transformer, the more meaningful distinction lies not in the decoder design itself but in how early and how deeply Gemini combines modalities during training.
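To make the idea of a single multimodal input stream concrete, here is a minimal sketch in plain Python. It assumes a generic early-fusion design in which text tokens and image patches are embedded to the same width and concatenated into one sequence. Gemini's real tokenizers, patching scheme, and dimensions are not public, so `D_MODEL`, `PATCH`, the toy vocabulary, and the random weights are all illustrative assumptions.

```python
import random

# Generic early-fusion illustration, NOT Gemini's (unpublished) architecture:
# text tokens and image patches are mapped to vectors of one shared width
# and placed in a single sequence that a Transformer could attend over.
D_MODEL = 4  # hypothetical shared embedding width
PATCH = 2    # hypothetical patch size: PATCH x PATCH pixels per image token

random.seed(0)
VOCAB = {"describe": 0, "this": 1, "image": 2, "briefly": 3}
# Random lookup table: one D_MODEL-wide vector per vocabulary entry.
TEXT_TABLE = [[random.uniform(-1, 1) for _ in range(D_MODEL)] for _ in VOCAB]
# Random linear projection from a flattened patch (PATCH*PATCH values) to D_MODEL.
PATCH_PROJ = [[random.uniform(-1, 1) for _ in range(D_MODEL)]
              for _ in range(PATCH * PATCH)]


def embed_text(tokens):
    """Return one ("text", vector) pair per token."""
    return [("text", TEXT_TABLE[VOCAB[t]]) for t in tokens]


def embed_image(image):
    """Cut a grayscale image (height and width divisible by PATCH) into
    non-overlapping patches and linearly project each flattened patch."""
    out = []
    for r in range(0, len(image), PATCH):
        for c in range(0, len(image[0]), PATCH):
            flat = [image[r + i][c + j] for i in range(PATCH) for j in range(PATCH)]
            vec = [sum(flat[k] * PATCH_PROJ[k][m] for k in range(len(flat)))
                   for m in range(D_MODEL)]
            out.append(("image", vec))
    return out


image = [[(r * 4 + c) / 15 for c in range(4)] for r in range(4)]  # 4x4 toy image
sequence = embed_text(["describe", "this"]) + embed_image(image) + embed_text(["briefly"])

print([modality for modality, _ in sequence])
# ['text', 'text', 'image', 'image', 'image', 'image', 'text']
```

The point of this layout is that later layers see one sequence and can attend across modalities directly, instead of receiving a separately computed image summary.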

Benchmark comparisons support this positioning, although the best-known head-to-head figures come from the Gemini 1.0 generation. In Google's December 2023 technical report, Gemini Ultra scored 90.04% on the Massive Multitask Language Understanding (MMLU) benchmark, compared with 87.29% for GPT-4 under the same chain-of-thought prompting setup, and it also posted the highest F1 score on the DROP reading comprehension benchmark. Google additionally reported that Gemini Ultra outperformed GPT-4V on several multimodal benchmarks. These results point to strength in both factual knowledge and nuanced language understanding, which Gemini 2.0 builds on, though such comparisons depend heavily on prompting strategy and evaluation details.
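MMLU is scored as plain multiple-choice accuracy, while DROP reports a token-overlap F1 score that gives partial credit for partially correct answers. The sketch below shows a simplified, SQuAD-style version of that F1 computation. DROP's official scorer adds further handling, for example for numbers and multi-span answers, so treat this as an approximation of the idea rather than the benchmark's exact metric.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and English articles, split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def token_f1(prediction, gold):
    """Harmonic mean of token precision and recall between two answers."""
    pred_tokens = normalize(prediction)
    gold_tokens = normalize(gold)
    if not pred_tokens and not gold_tokens:
        return 1.0
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(token_f1("The 3 touchdowns.", "3 touchdowns"))       # 1.0
print(round(token_f1("3 field goals", "3 touchdowns"), 3))  # 0.4
```

In the second example, the prediction shares one of its three tokens with the two-token reference, giving precision 1/3, recall 1/2, and an F1 of 0.4.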

Beyond raw performance, efficiency is a key differentiator for the Gemini family. Smaller variants such as Gemini Nano, introduced with Gemini 1.0 in 1.8-billion and 3.25-billion-parameter versions, are optimized for on-device deployment. This brings capable AI to resource-constrained devices like smartphones, in contrast with the substantial computational resources required by larger models like GPT-4 and Gemini Ultra, which are primarily suited to data centers and cloud-based applications. This focus on efficiency extends Gemini's potential applications across a wider range of devices and use cases.
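A back-of-the-envelope calculation shows why parameter count matters so much on a phone. The sketch below assumes that memory is dominated by the stored weights (ignoring activations, caches, and runtime overhead) and compares 16-bit storage with 4-bit quantization, a common choice for on-device models. The helper `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate storage for model weights alone, in decimal gigabytes.
    Hypothetical helper: ignores activations, caches, and runtime overhead."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts of the two Gemini Nano variants described above.
for name, params in [("Nano-1", 1.8e9), ("Nano-2", 3.25e9)]:
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(params, bits):.2f} GB")
```

At 4 bits per weight, the 3.25-billion-parameter variant needs roughly 1.6 GB for its weights, versus about 6.5 GB at 16 bits, a large saving on a device that shares its memory with the operating system and other apps.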

Despite its advancements, Gemini 2.0 is not without challenges. Its vision capabilities currently lag behind its text processing, leaving room for future development. Ensuring fairness and mitigating biases inherited from training data require ongoing research and robust mitigation strategies. Deploying such powerful models at scale also raises ethical questions about responsible use and potential misuse. Together, these challenges call for a careful approach to development and deployment that weighs innovation against its risks.

Astra: The Universal AI Assistant - Promise and Potential

Project Astra embodies Google's vision for a truly universal AI assistant. It is designed to integrate with existing Google services, including Search, Maps, and Lens, providing a unified interface for finding information and completing tasks. Astra interacts with users through text, speech, image, and video, drawing on Google's vast knowledge base and contextual understanding to provide relevant, helpful responses. This integrated approach aims to create a more intuitive and efficient user experience than today's fragmented AI assistants offer.

Astra's strategic importance lies in its potential to transform how users interact with technology. By consolidating access to various Google services, Astra simplifies complex workflows and streamlines daily tasks. Imagine effortlessly scheduling appointments, managing emails, navigating maps, and conducting image searches, all through a single, natural language interface. This enhanced user experience could significantly increase user engagement with Google's ecosystem, strengthening its competitive position in the rapidly evolving AI landscape.
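The idea of a single interface fronting many services can be sketched as a dispatcher that routes each request to a service handler. This is a deliberately simplified illustration: every handler name is hypothetical, and keyword matching stands in for the model-driven intent detection or tool calling a real assistant would use. Nothing here reflects Astra's actual implementation.

```python
import re
from typing import Callable, List, Tuple


# Hypothetical handlers standing in for service integrations; none of these
# names correspond to a real Google or Astra API.
def calendar_handler(request: str) -> str:
    return f"[calendar] {request}"


def email_handler(request: str) -> str:
    return f"[email] {request}"


def maps_handler(request: str) -> str:
    return f"[maps] {request}"


def image_search_handler(request: str) -> str:
    return f"[lens] {request}"


def fallback_handler(request: str) -> str:
    return f"[assistant] {request}"


# Keyword routing table, checked in order. A real assistant would let the
# model choose the tool instead of matching keywords.
ROUTES: List[Tuple[Tuple[str, ...], Callable[[str], str]]] = [
    (("schedule", "meeting", "appointment"), calendar_handler),
    (("email", "inbox", "reply"), email_handler),
    (("directions", "navigate", "route"), maps_handler),
    (("photo", "picture", "image"), image_search_handler),
]


def dispatch(request: str) -> str:
    """Send a natural-language request to the first matching service handler."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    for keywords, handler in ROUTES:
        if words.intersection(keywords):
            return handler(request)
    return fallback_handler(request)


print(dispatch("Schedule a dentist appointment for Friday"))
# [calendar] Schedule a dentist appointment for Friday
print(dispatch("Navigate to the nearest pharmacy"))
# [maps] Navigate to the nearest pharmacy
```

The fallback route matters as much as the specific ones: requests that match no service still get a general answer rather than an error.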

However, the development and deployment of Astra present significant challenges. Ensuring seamless interoperability between different Google services and maintaining data consistency across platforms require robust engineering and careful planning. Addressing privacy concerns, particularly with Astra's access to sensitive user data through multiple modalities, is paramount. The potential for errors and unexpected behavior in such a complex system necessitates rigorous testing and continuous monitoring.
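One common privacy safeguard in systems like this is to redact obvious personal identifiers before requests are logged or stored. The sketch below assumes that email addresses and phone numbers are the identifiers of interest and uses simple regular expressions. The patterns are illustrative assumptions, and production-grade detection of personal data needs far broader coverage than this.

```python
import re

# Illustrative patterns only; real personal-data detection is much broader.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits are gone
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Email jane.doe@example.com or call +1 555-123-4567."))
# Email [EMAIL] or call [PHONE].
```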

Early demonstrations of Astra have provided a glimpse into its potential while also revealing limitations. While Astra has shown impressive capabilities in understanding and responding to complex requests, it has also exhibited glitches and inaccuracies, highlighting the ongoing development process. These early findings underscore the need for continuous improvement and refinement, focusing on addressing edge cases and improving the system's robustness and reliability.

AI and Society: Balancing Innovation with Ethical Considerations

The development and deployment of powerful AI systems like Gemini 2.0 and Astra raise important ethical considerations. One major concern is the potential for AI bias, stemming from biases present in the vast datasets used for training. These biases can manifest in various ways, leading to unfair or discriminatory outcomes. Mitigating these biases requires careful curation of training data, rigorous testing for bias, and the development of techniques for detecting and correcting biased outputs. Transparency in data handling practices and algorithmic decision-making is also crucial for building user trust and ensuring accountability.
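Testing for bias often starts with simple group-level metrics. The sketch below computes demographic parity difference, the gap between the highest and lowest rate of positive outcomes across groups, for a hypothetical binary classifier. The predictions and group labels are made up, and this is only one of several fairness metrics, none of which captures bias on its own.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-outcome rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical outputs of a binary classifier for two groups, A and B.
preds = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

Here group A receives positive outcomes 75% of the time and group B 25% of the time, so the difference is 0.5. A value near 0 indicates similar selection rates, though not necessarily fair treatment.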

Another key concern is the broader societal impact of these technologies. While AI assistants like Astra can enhance productivity and accessibility, they also pose risks, such as job displacement and the widening of existing inequalities. Some workforce projections suggest a shift in skill demand, with growing demand for technological skills and shrinking demand for basic cognitive skills. Addressing these challenges requires proactive strategies, including reskilling and upskilling initiatives, policy interventions, and ongoing dialogue among researchers, policymakers, and the public.

The Future of AI: A Call for Responsible Innovation

Gemini 2.0 and Project Astra represent a significant leap forward in the field of AI, showcasing the potential of multimodal models and universal AI assistants to transform how we interact with technology. However, the journey towards realizing this potential is fraught with challenges, both technical and ethical. The future of AI depends not only on continued innovation but also on a commitment to responsible development, ethical considerations, and proactive strategies to mitigate potential societal impacts. By navigating these challenges thoughtfully and collaboratively, we can shape a future where AI serves as a powerful tool for progress and human betterment.
